The Five P's Framework: How to Pick Winning AI Use Cases with Kyle Norton

Welcome to the AI Barfed Series! This is our third post in the series. Today, we’re sharing the use case selection methodology showcased by Kyle Norton during AI Barfed.

Kyle Norton has made exactly zero bad AI bets at Owner.com.

Not because he’s got some crystal ball or insider access to the latest models. It’s simpler than that: Kyle never picks AI tools. He picks problems.

As CRO at Owner.com, Kyle spoke at our AI Barfed in September about something that’s been driving growth leaders absolutely insane: why do most AI experiments fail to deliver real business impact?

His answer cut through all the vendor noise: it’s not the technology that’s failing. It’s the selection process.

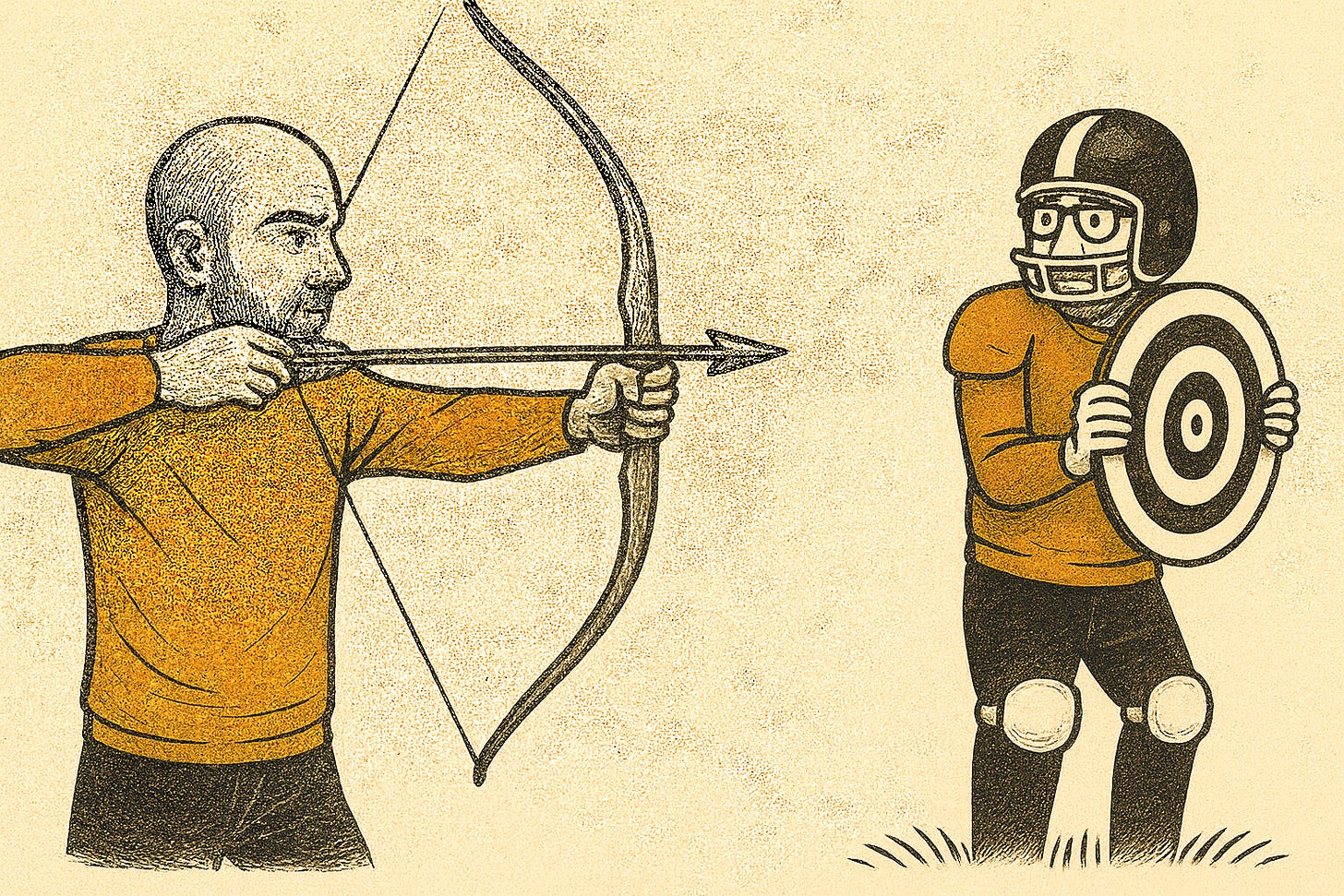

The Random Experimentation Trap

Here’s what Kyle sees happening at most companies:

Someone hears about a cool AI tool from a friend. An investor sends over an “interesting” demo. A LinkedIn message catches your eye at precisely the wrong moment. So you launch an experiment.

Then another one. And another.

None of it connects to your actual business priorities. You’re splitting attention across too many initiatives. You never put enough critical mass behind any single thing to fully embed it in your workflows. And because nothing is important enough to sustain focus, you keep switching.

The result? Your team develops AI fatigue.

“As soon as you lose the team, and as soon as they’re going to tune out those investments, you’re in a really, really tough spot,” Kyle told our audience. “Because you’re doing things too randomly, and there’s not enough centralized investment and attention behind anything, then you don’t really learn properly.”

The technology might work. But if your sales team is thinking “well, Kyle already told me XYZ tool was going to be good, and then we stopped talking about that 60 days later, and now there’s this new tool...” you’ve lost them.

And once you lose your frontline team, you’ve guaranteed that your next experiment will fail too… and the next, and the next.

Kyle’s framework flips the typical selection process. Instead of starting with “what cool AI tools should we try?”, he starts with “what are our biggest business problems?”

This isn’t just semantic. It fundamentally changes how you evaluate opportunities.

Kyle uses what he calls the Four P’s Framework, borrowed and adapted from Annie Duke’s work in “How to Decide” and “Thinking in Bets.” During our conversation, we added a fifth P to make the implicit diagnostic phase explicit, because we kept circling back to it: before you can evaluate possibilities, you need to identify Problems first.

So let’s call it the Five P’s Framework, starting with that crucial first step:

You need to identify your most significant business problems first. Not the things that would be “nice to have.” The things that are actually keeping you from hitting your goals this quarter.

At Owner.com, those might be: we have a pipeline problem, we’re behind on our headcount plan, or deal quality is suffering.

Once you’ve identified those core problems, then you start mapping possibilities. And this is where Kyle introduces another framework: the Opportunity Solution Tree, developed by Teresa Torres.

“Oftentimes, people will bring you an opportunity and have maybe a solution in mind that they’ve jumped to,” Kyle explained. “But really, we want to go back up this opportunity tree.”

This is the consulting version of root cause analysis. The Kaizen “five whys.” Whatever problem-solving framework you prefer, the point is to dig deeper.

Example: We have a hiring gap.

Why? We don’t have enough qualified people making it to the case study stage.

Why? Is it because people are dropping out of the process, or are we not putting enough people at the top of the funnel?

Why? We post our roles but don’t do any outbound sourcing, and our employer brand isn’t strong enough.

Now you’re getting somewhere. Now you can start mapping out possibilities that actually solve root causes instead of symptoms.

The Five P’s: Expected Value Meets Reality

Once you’ve done the diagnostic work and mapped your opportunity landscape, Kyle evaluates potential projects on four core dimensions (on top of that crucial first P, Problems, which we just covered) and then combines them in a final prioritization step:

1. Possibilities

What are all the different ways we could solve this problem?

Not just AI solutions. All solutions. Because sometimes the answer isn’t a new tool at all.

If your core problem is that hiring gap, your possibilities include: AI-powered outreach to candidates, building simulation environments where candidates can practice your frameworks, improving your employer brand content, hiring a recruiter, or changing your compensation structure.

Lay out the entire landscape before narrowing down.

2. Payoff

If this bet pays off, what’s the actual impact?

Kyle pushes his team to think in terms of outlier upside versus incremental improvement. Too many companies work on incremental bets because they feel safer.

“There’s too much incrementality in what gets prioritized,” Kyle said. “You’re trying to understand: is this a 5% increase in pipeline? Or is this a 150% increase in pipeline?”

This is where most teams get lazy. They’ll say “this will improve efficiency” or “this will help our reps be more productive.” Kyle wants numbers. Specific, defensible projections of business impact.

3. Probability

What’s the likelihood this actually works?

This is where you need to be brutally honest. Some bets are proven: you’ve seen competitors do it successfully, or you have clear data suggesting it will work in your market.

Other bets are speculative. Kyle gave an example of a significant investment Owner.com made in AI-powered website education. “The upside to that bet is big enough that we took it on,” he explained, even though the probability was uncertain because “we sell to mom and pop restaurant owners” who might not want to engage with AI in the buying process.

But that speculation was acceptable because of the payoff potential.

4. Perspiration

What’s the total effort required, including from other teams?

This is the trap that kills most projects. You might think “oh, this is just my director working on it for a month.” But then you realize you also need product marketing to make this one of their top three priorities. And you need rev ops to build new dashboards. And you need enablement to create training.

Suddenly, your “one person, one month” project requires coordination across four teams and becomes a nightmare of a bottleneck.

Kyle is explicit: map out every team dependency before you commit. The perspiration isn’t just your team’s effort; it’s the total organizational load.

5. Prioritization

Now you do the math.

Payoff × Probability = Expected Value

Expected Value ÷ Perspiration = Your Priority Score

This is a classic EV (expected value) calculation, adjusted for effort. Sometimes you’ll work through this framework and discover that none of your high-payoff bets have a reasonable probability given current resources. That’s not a failure; that’s the framework working. It’s telling you to go hunting for different problems where the math works better.
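To make the arithmetic concrete, here is a minimal Python sketch of the scoring step. The `Bet` class, the bet names, and every number below are hypothetical illustrations, not figures from Kyle's session: payoff is assumed to be a percentage lift in pipeline, probability a value between 0 and 1, and perspiration the total person-weeks across all teams involved.

```python
from dataclasses import dataclass


@dataclass
class Bet:
    """One candidate project. All field units are assumptions for illustration."""
    name: str
    payoff: float        # estimated impact if the bet works, e.g. % pipeline lift
    probability: float   # chance the bet works, between 0 and 1
    perspiration: float  # total effort across ALL teams, e.g. person-weeks

    def __post_init__(self) -> None:
        if not 0.0 <= self.probability <= 1.0:
            raise ValueError("probability must be between 0 and 1")
        if self.perspiration <= 0:
            raise ValueError("perspiration must be positive")

    @property
    def expected_value(self) -> float:
        # Payoff x Probability = Expected Value
        return self.payoff * self.probability

    @property
    def priority_score(self) -> float:
        # Expected Value / Perspiration = Priority Score
        return self.expected_value / self.perspiration


def rank(bets: list[Bet]) -> list[Bet]:
    """Return bets sorted from highest to lowest priority score."""
    return sorted(bets, key=lambda b: b.priority_score, reverse=True)


# Hypothetical inputs: a speculative outlier bet versus a safe incremental one.
bets = [
    Bet("Employer brand content", payoff=5, probability=0.75, perspiration=3),
    Bet("AI candidate outreach", payoff=150, probability=0.25, perspiration=15),
]

for bet in rank(bets):
    print(f"{bet.name}: EV={bet.expected_value:.2f}, score={bet.priority_score:.2f}")
```

With these made-up inputs, the speculative bet still ranks first: its payoff is large enough to outweigh both the lower probability and the heavier cross-team effort. Change the perspiration estimate to include the teams you forgot, and the ranking can flip.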

What This Changes About AI Experimentation

Here’s what Kyle’s framework does that most AI selection processes don’t:

It creates alignment before you start. Because you’re starting with company priorities and working through the five P’s with stakeholders, everyone understands why this experiment matters and what success looks like.

It prevents random experimentation. You can’t just chase shiny objects when you’ve got a formal evaluation showing that Project A has 10x the expected value of Project B.

It sets clear success criteria. When you’ve mapped payoff and probability explicitly, you know exactly what data you need to collect to determine if the experiment worked.

It respects your team’s capacity. By forcing honest accounting of perspiration - including the time of other teams - you stop overcommitting and burning people out.

It builds trust for future experiments. When you’re rigorous about selection, and you communicate clearly about why you chose this bet over others, your team knows you’re not just chasing trends.

The Missing Piece: Change Management

Here’s where Kyle dropped the most important insight of the entire session:

“It’s way more about change management and cultural transformation than it is the technical implementation.”

You can have the best AI tool in the world. You can have perfect technical implementation. But if your team doesn’t adopt it, you’ve got nothing.

Kyle sees this constantly: “The technology is much less important than the adoption is. And I think that there will be a significant competitive advantage for companies that are really great at change management.” We agree with Kyle on this front, hence our mantra: ‘Tooling is for Tools’.

His principles for driving adoption:

Foundation Before Flash

Everyone wants to do flashy, sophisticated stuff. But Kyle’s approach is to start with use cases that make reps’ lives easier. Build trust first. Show them AI can help them, not replace them.

Integrate, Don’t Add Surfaces

The AI tools Kyle is most skeptical of are those that require reps to live in yet another application. “We want you to live in Salesforce and Salesloft and your inbox and Slack today, and we want you to also live in this new place that’s going to give you your sales playbook...”

No. Find tools that embed in your existing stack.

Enable The Rollout

Kyle’s team uses a “traffic light methodology” for different levels of change:

Green light changes: Easy changes that don’t require enablement team involvement

Yellow light changes: More significant, requiring Slack announcements, updated documentation, and reinforcement in team meetings

Red light changes: Complex changes requiring formal training, certifications, and multiple touchpoints
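One way to keep the three levels from living only in someone's head is to write them down as a lookup. The sketch below is a hypothetical encoding, not Owner.com's actual tooling: the `ChangeLevel` enum and `rollout_checklist` function are names invented here, and the steps are taken directly from the descriptions above.

```python
from enum import Enum


class ChangeLevel(Enum):
    GREEN = "green"
    YELLOW = "yellow"
    RED = "red"


# Rollout steps per level, as described in the post.
ROLLOUT_STEPS = {
    ChangeLevel.GREEN: [],  # easy change, no enablement team involvement
    ChangeLevel.YELLOW: [
        "Slack announcement",
        "updated documentation",
        "reinforcement in team meetings",
    ],
    ChangeLevel.RED: [
        "formal training",
        "certification",
        "multiple touchpoints",
    ],
}


def rollout_checklist(level: ChangeLevel) -> list[str]:
    """Return a copy of the rollout steps required for a change level."""
    return list(ROLLOUT_STEPS[level])


for level in ChangeLevel:
    steps = rollout_checklist(level) or ["no enablement involvement needed"]
    print(f"{level.value}: {', '.join(steps)}")
```

Classifying each change before launch forces the enablement cost into the open, which feeds straight back into the perspiration estimate.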

Most companies are “criminally under-resourced when it comes to enablement and rev ops,” according to Kyle. If you want to make rapid, transformative changes to your company, you need those functions to be fully staffed.

The Competitive Advantage Hiding In Plain Sight

Here’s what Kyle understands that most growth leaders miss: the competitive advantage in AI doesn’t come from having access to better models. Everyone has access to the same models.

The advantage comes from being exceptional at selecting the right problems to solve and excellent at getting your team to adopt solutions.

“There will be a significant competitive advantage for companies that are really great at change management and can help deploy new things to their frontline teams,” Kyle said.

That’s it. That’s the whole game.

You can have the most sophisticated AI stack in your industry. But if you picked the wrong problems to solve, or if your team won’t use the tools, you’ve got nothing.

Kyle’s framework gives you both: a rigorous process for picking the right bets, and a methodology for ensuring your team actually adopts them.

The companies that master both? They’re going to move faster, learn faster, and compound their advantages faster than everyone else stuck in the random experimentation trap.

Kyle Norton is Chief Revenue Officer at Owner.com, where he’s responsible for go-to-market strategy and execution. Before Owner.com, Kyle held senior revenue roles at high-growth B2B companies and has become known for his systematic approach to AI adoption and change management.

This post is based on Kyle’s session at AI Barfed in September 2025, where he shared his frameworks for selecting AI use cases with GTM leaders.
